About High-Performance Computing at UR

Spydur, Spiderweb, and Arachne

Spydur

Spydur is the University of Richmond’s High-Performance Computing environment. It is available to all faculty and their students who do research.

UR purchased Spydur in 2021. It is a custom-built cluster that supports the research done at Richmond. It has 30 compute nodes:

- 12 compute nodes with 52 Xeon cores and 384GB of memory each.

- 10 compute nodes with 52 Xeon cores and 768GB of memory each.

- 5 compute nodes with 52 Xeon cores and 1536GB of memory each.

- 2 compute nodes that support scientific computing with A40 GPUs.

- 1 compute node configured for AI and ML computing with A100 GPUs.

In total, Spydur has 1508 Xeon cores and about 20TB of memory.
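The CPU-only part of these totals can be checked from the node list above. The following is a minimal sketch in Python that uses only the counts given in that list. The three GPU nodes are left out because their memory is not listed here, and the variable names are illustrative only.

```python
# (number of nodes, Xeon cores per node, memory per node in GB),
# copied from the first three entries of the node list above.
cpu_node_groups = [
    (12, 52, 384),
    (10, 52, 768),
    (5, 52, 1536),
]

cpu_nodes = sum(count for count, _, _ in cpu_node_groups)
cpu_cores = sum(count * cores for count, cores, _ in cpu_node_groups)
cpu_memory_gb = sum(count * mem_gb for count, _, mem_gb in cpu_node_groups)

print(f"CPU-only nodes: {cpu_nodes}")
print(f"Xeon cores on CPU-only nodes: {cpu_cores}")
print(f"Memory on CPU-only nodes: {cpu_memory_gb}GB ({cpu_memory_gb / 1024}TB)")
```

The 27 CPU-only nodes account for 1404 cores and 19968GB (19.5TB) of memory, so the remainder of the stated totals would come from the three GPU nodes.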

Storage is provided by 336TB of disk in a RAID 6 configuration, and each node has at least 2TB of fast SSD storage.

Spydur runs the SLURM resource management software to schedule the work and allocate the available nodes fairly. More information about SLURM is available on the SLURM website.

The above nodes are grouped into named partitions.

- Basic: The default partition, made up of the nodes with 384GB of memory and no GPU.

- Medium: Compute nodes with 768GB of memory.

- Large: Compute nodes with 1536GB (1.5TB) of memory.

- ML: One node with two A100 GPUs.

- Sci: The two nodes with eight A40 GPUs each.

Additionally, we have “condo” nodes associated with UR faculty who have purchased nodes for their specific research requirements.
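The named partitions above correspond to what a job requests through SLURM's `--partition` option. The following is a minimal sketch in Python of choosing the smallest CPU partition that fits a job's memory needs and writing the matching batch script. The lowercase partition names, the helper functions `pick_partition` and `render_job_script`, and the example command are assumptions for illustration; the names actually configured on Spydur can be listed with `sinfo`.

```python
# Memory per node (GB) for the CPU partitions, taken from the list above.
# ASSUMPTION: the lowercase names are hypothetical; check `sinfo` on the cluster.
CPU_PARTITIONS = [("basic", 384), ("medium", 768), ("large", 1536)]


def pick_partition(mem_gb):
    """Return the smallest CPU partition whose nodes have at least mem_gb of memory."""
    for name, node_mem_gb in CPU_PARTITIONS:
        if mem_gb <= node_mem_gb:
            return name
    raise ValueError(f"no single node offers {mem_gb}GB of memory")


def render_job_script(job_name, command, mem_gb, cpus=1):
    """Build the text of a single-node SLURM batch script."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --partition={pick_partition(mem_gb)}",
        "#SBATCH --nodes=1",
        f"#SBATCH --cpus-per-task={cpus}",
        f"#SBATCH --mem={mem_gb}G",
        command,
    ]
    return "\n".join(lines) + "\n"


# Hypothetical job: 8 cores and 500GB of memory, which exceeds a Basic node.
print(render_job_script("demo", "python analysis.py", mem_gb=500, cpus=8))
```

The printed text can be saved to a file and submitted with `sbatch`. The memory a scheduler makes available on a node is normally somewhat below the physical figure, so a request at the exact limit of a partition may need to move up to the next one.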

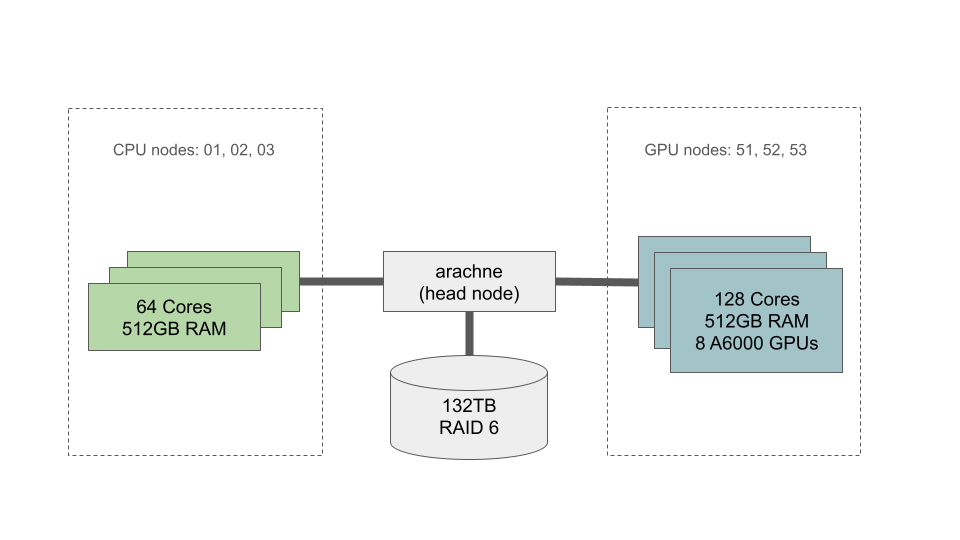

Spiderweb

Spiderweb is a large web server associated with HPC at UR. Many of the courses and programs in Data Analytics & Data Science use RStudio and Jupyter Notebooks, and these are available on campus via Spiderweb. Faculty who want to use this software may request an account on Spiderweb.

Spiderweb is also used to distribute public research data to other universities. A disk array of more than 100TB is available for storage. Faculty who have data to distribute may make a request by sending an email to hpc@richmond.edu.

Major Equipment Available

The University of Richmond provides its faculty and students with a high-performance computing (HPC) environment that is supported by Computational Support Specialists (see below). The HPC includes 30 shared compute nodes that were custom-built in 2021. Each node contains 52 Xeon cores (total cores = 1508) with node memory ranging from 384 GB to 1.5 TB. Two of the nodes have 8x Nvidia A40 GPUs and one node has 2x Nvidia A100 GPUs. In 2023, we are adding $250,000 in GPU hardware to the HPC; this will likely provide an additional 20-25 nodes configured with either Nvidia RTX 4090, RTX A6000 or H100 GPUs.

Support Personnel

The HPC hardware is maintained in the University’s climate-controlled Data Center, with system administration support provided by a Linux specialist (Sasko Stefanovski) from the University’s Information Services department. User management, software installation and research support for faculty are provided by two full-time Computational Support Specialists (George Flanagin and Joao Tonini).